Which Test Should I Write? A Strategic Guide to Front-End Testing

A Deep Dive into Front-End Testing: Learn What Mistakes Turn Tests into a Burden and How to Apply a Different Approach for a More Effective Testing Suite

So, which test should I write?

If you’ve ever asked yourself this question, you have likely heard of one of the many testing models out there: the Testing Pyramid, Testing Cone, Testing Trophy, or Testing Honeycomb.

I’m not going to cover any of these models in this article. In fact, I suggest you stop thinking about them altogether!

Instead of applying a subjective testing model that likely doesn’t fit your use case, we’ll focus on understanding the benefits and costs of an individual test.

Once you understand the tradeoffs between different types of tests, the questions of which testing model to choose and when to write a test will fade away: you will know which test to write and when to write it, based on the actual value you expect to get from it.

You will also learn how to write tests you can maintain in the long term. Writing tests is just the first step; the much harder part is maintaining them and keeping them useful.

Let’s dive in:

1. How Tests Can Go Wrong

As a full-stack developer, I’ve experienced tests from two completely opposite sides:

Testing Hell

Almost every piece of code you write breaks your tests. Most of the time the tests break, it’s not because of a real failure but because the tests need refactoring to match the code changes.

The time it takes to develop features grows, as each feature requires fixing the old tests that fail and adding new tests to meet high coverage requirements.

Every once in a while the tests catch a bug, so you keep them, but you dread working on tests and fantasize about deleting them entirely.

Testing Heaven

The tests work like clockwork, and when they fail, they demand your immediate attention. More often than not, they fail because of a real issue that needs to be fixed.

The time you used to spend manually testing for regressions is saved, because you trust the tests to catch bugs.

You are eager to add more tests after every bug fix and for every feature, because the tests have saved you from big mistakes multiple times and have proven their worth.

The tests offer a safety net and save time. Although they take longer to write up front, development goes faster overall because less time is spent on bugs and manual regression testing.

Product quality is higher too, and tests start to feel like a necessity.

Same Tool, Different Results: Why Outcomes Vary

How can the same tool (tests) produce two completely different results? In one case tests are a pain; in the other, they are lifesavers. It’s the same tool, after all.

The reason is that tests have a price!

Because tests are a recommended practice, developers sometimes treat them as always beneficial, not realizing that when tests are taken too far, or applied at the wrong time, in the wrong place, or in the wrong way, their price can exceed their benefit.

This is why it’s important to understand the tradeoffs of each individual test. Doing so makes the cost and benefit of every test visible, helping you avoid the ‘testing hell’ scenario and giving you the tools to pave the way to ‘testing heaven’.

2. The Hidden Cost of Tests

When you understand the long-run cost of your tests, you’ll be able to identify the scenarios where tests actually hurt your efforts more than they help.

When it comes to the cost of a test, many factors are involved, from the time it takes to write and execute tests to the monetary costs incurred when running them.

Taking these factors into account is a good idea; using them as guidelines is not.

Many developers let these factors dictate their testing efforts, instead of treating them as current constraints that can be expanded and changed.

For example, because UI tests take a long time to execute, developers might avoid investing in them, instead of running them in parallel so the suite finishes quickly.
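Assuming Playwright as the UI test runner (the article does not name one), a sketch of a configuration that runs UI tests in parallel could look like this. The worker count of 4 is an arbitrary assumption to tune for your own CI machines.

```typescript
// playwright.config.ts (assumes Playwright is the UI test runner)
import { defineConfig } from "@playwright/test";

export default defineConfig({
  // Run tests within a single file in parallel too, not only across files.
  fullyParallel: true,
  // Worker processes on CI; 4 is an illustrative value. Locally, let Playwright choose.
  workers: process.env.CI ? 4 : undefined,
});
```

For larger suites, Playwright can also split a run across several machines with its `--shard` option, for example `npx playwright test --shard=1/4`.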

This is why we’re going to focus on a different cost, one that I argue is far more impactful than any of the others:

The Cost To Change Code

As requirements change and new features are added, code changes are inevitable. Depending on which tests you have, these changes may break your tests.

When this happens often, it leads to the ‘testing hell’ scenario I described at the beginning. This is what I call the cost to change code.

To be clear, this is not about a test failing because it found a bug, which ultimately saves time. It’s about tests that break because of the natural evolution of the codebase and require constant maintenance just to keep working.

More often than not, this is the factor that determines whether your tests become a useful safety net or shackles you have to carry.

This metric is hard to measure, because the cost to change code depends directly on how often the codebase changes, and that rate is highly situation-dependent.

It’s also not black and white, where your tests either break when you change code or they don’t. Sometimes business logic or the UI changes, and you will have to fix broken tests.

What matters is how often it happens: maintaining a balance so that, overall, your tests offer more value than they cost.

Understanding Coupling and Test Scope

In essence, the cost to change code can be gauged by how tightly your test code is coupled to your application code.

To gauge the level of coupling, identify the points where your test code connects to your application code.

If your test calls your code directly, through a function for example, it is now coupled to that function. When the function’s interface changes, or the function becomes obsolete, the test must be updated accordingly.
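A minimal sketch of this coupling, using a hypothetical cart module (the names `CartItem`, `lineTotal`, and `cartTotal` are illustrative, not from the article): test A calls an internal helper directly, while test B only calls the public function.

```typescript
// Hypothetical cart module; all names are illustrative.
interface CartItem {
  price: number;
  quantity: number;
}

// Internal helper: an implementation detail the UI never exposes.
function lineTotal(item: CartItem): number {
  return item.price * item.quantity;
}

// Public behavior: the total the user ultimately sees.
function cartTotal(items: CartItem[]): number {
  return items.reduce((sum, item) => sum + lineTotal(item), 0);
}

// Test A is coupled to the helper's name and signature.
function testLineTotal(): boolean {
  return lineTotal({ price: 2, quantity: 3 }) === 6;
}

// Test B is coupled only to the public function and its result.
function testCartTotal(): boolean {
  const items: CartItem[] = [
    { price: 2, quantity: 3 },
    { price: 5, quantity: 1 },
  ];
  return cartTotal(items) === 11;
}
```

If `lineTotal` is later inlined into `cartTotal` or renamed, test A stops compiling and has to be rewritten, while test B keeps passing because its coupling point did not change.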

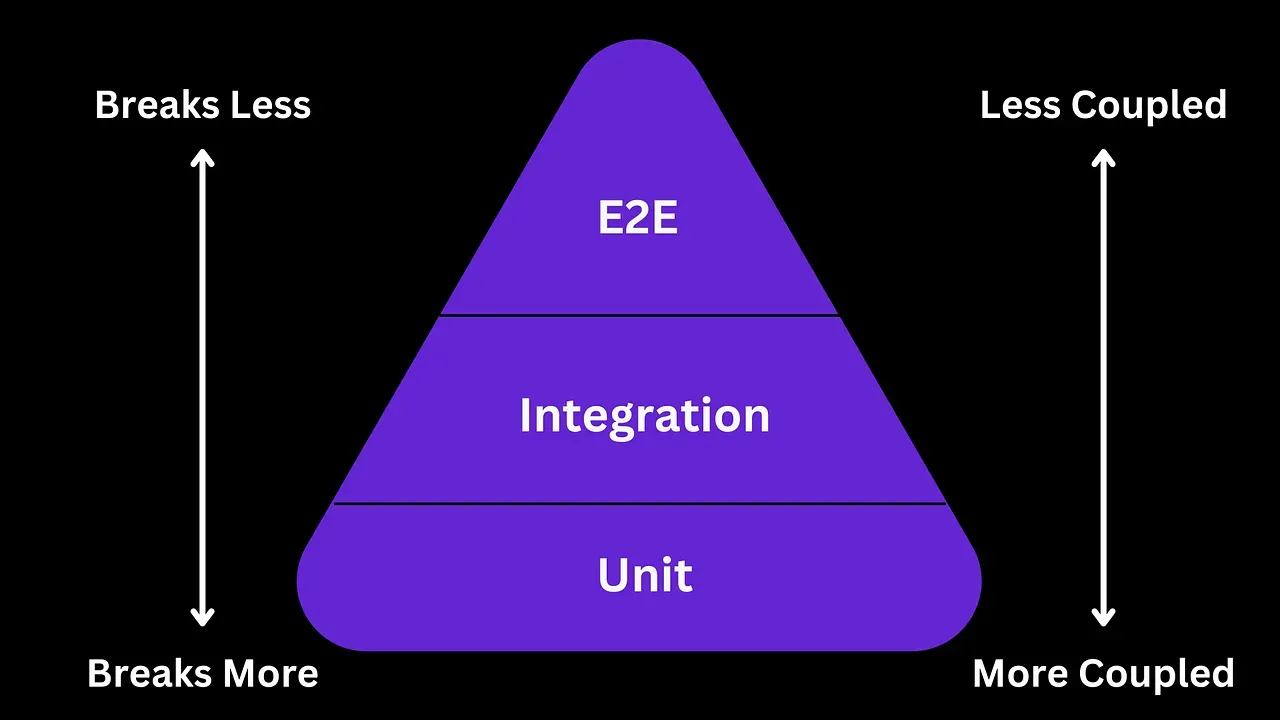

If we look at common testing definitions (unit, integration, e2e) - the highest coupling is in unit tests, with less coupling in the integration tests and e2e tests:

The classic testing pyramid, depicting the overlooked cost of change, and how it affects different type of tests

These categories (unit, integration, E2E) are subjective and vague, so what is it about them that makes one more coupled than another?

The answer is the test scope.

The test scope is everything that runs behind the scenes during a test; in other words, what you’re actually testing. It could be a single function, a chain of functions, or an entire flow spanning front-end code and the back-end services that query a database.

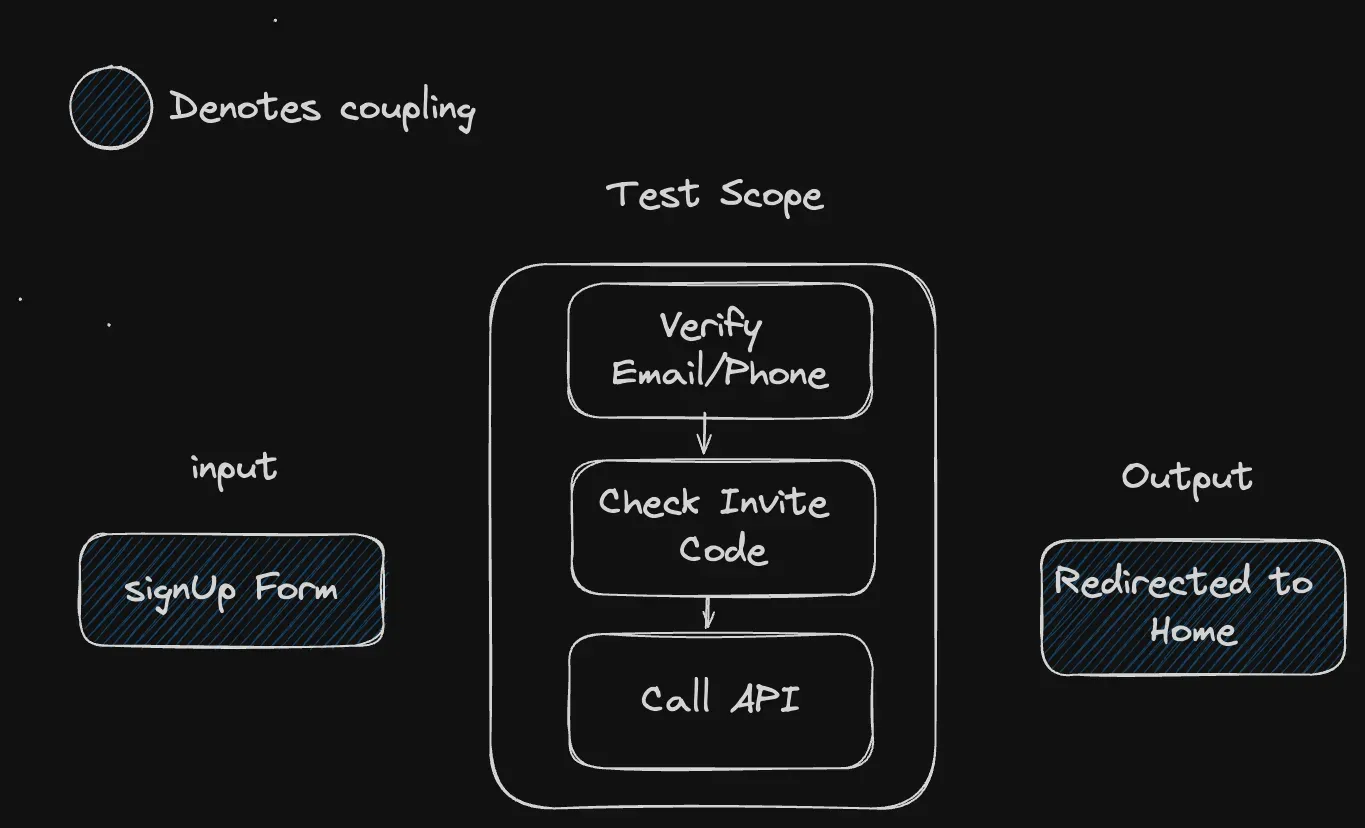

The larger the test scope, the less coupled the test is to it. This means that the scope could change and the test will not break, as long as the input and the output stay the same.

An example of a signup test, as long as the input and outputs don’t change (the coupled points), the test will keep working
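The signup example can be sketched in code. Everything here is hypothetical: the `SignupResult` shape, the simplified validation rules, and the two implementations. The point is that one test, coupled only to the inputs (email and password) and the output, passes against both the original and the refactored implementation.

```typescript
// Hypothetical output shape of a signup flow.
type SignupResult = { ok: true; userId: string } | { ok: false; error: string };

// Version 1: validation written inline.
function signUpV1(email: string, password: string): SignupResult {
  if (!email.includes("@")) return { ok: false, error: "invalid-email" };
  if (password.length < 8) return { ok: false, error: "weak-password" };
  return { ok: true, userId: `user:${email.toLowerCase()}` };
}

// Version 2: same inputs and outputs, refactored into a list of validators.
const validators: Array<[(email: string, password: string) => boolean, string]> = [
  [(email) => email.includes("@"), "invalid-email"],
  [(_, password) => password.length >= 8, "weak-password"],
];

function signUpV2(email: string, password: string): SignupResult {
  const failed = validators.find(([check]) => !check(email, password));
  if (failed) return { ok: false, error: failed[1] };
  return { ok: true, userId: `user:${email.toLowerCase()}` };
}

// One test, coupled only to the signature (inputs) and SignupResult (output).
function signupBehaves(signUp: (email: string, password: string) => SignupResult): boolean {
  const good = signUp("Ada@example.com", "correct-horse");
  const bad = signUp("not-an-email", "correct-horse");
  const weak = signUp("ada@example.com", "short");
  return (
    good.ok && good.userId === "user:ada@example.com" &&
    !bad.ok && bad.error === "invalid-email" &&
    !weak.ok && weak.error === "weak-password"
  );
}
```

Because `signupBehaves` never touches `validators` or any other internal detail, swapping `signUpV1` for `signUpV2` requires no change to the test.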

A few more examples of this:

- The input of a UI-based test is a UI element, and the output is some result on the screen. You could switch frameworks entirely, from Angular to React, and the test would not break, because it is coupled only to the UI while its scope is all of the code behind it.

- If you test implementation details, such as the functions behind the UI code, changing those details can break the tests even though the UI still works fine.

- A UI integration test that mocks network requests will break when the API’s data model changes, because the mocks couple it to the API implementation. A full E2E test that doesn’t use mocks will not, as its test scope includes the API implementation.
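To make the mock coupling concrete, here is a simplified sketch. It assumes a hypothetical `/api/user` endpoint whose `name` field was renamed to `displayName`, and it replaces `fetch` with an injected synchronous function so the example stays self-contained. The mock encodes the API’s data model, so it has to change together with the API; a full E2E test against the real back end has no such mock to update.

```typescript
// Simplified, synchronous stand-in for a network call (an assumption for brevity).
type GetJson = (url: string) => unknown;

// Client code written after the back end renamed `name` to `displayName`.
function loadGreeting(getJson: GetJson): string {
  const user = getJson("/api/user") as { displayName?: string };
  return `Hello, ${user.displayName ?? "stranger"}!`;
}

// A mock written against the OLD data model: it now returns the wrong shape.
const staleMock: GetJson = () => ({ name: "Ada" });

// The same mock after being updated to the NEW data model.
const updatedMock: GetJson = () => ({ displayName: "Ada" });

// loadGreeting(staleMock)   returns "Hello, stranger!" (a test expecting "Hello, Ada!" fails)
// loadGreeting(updatedMock) returns "Hello, Ada!"
```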

When it comes to the cost to change code, tests with a narrow scope are more tightly coupled to the code under test, so you will have to update them more frequently as the code evolves.

Tests with a large scope, on the other hand, break only when the coupled inputs or outputs change, and can mostly keep working without fixes even as the implementation changes.

3. Choosing The Right Type Of Test

Before discussing the tradeoffs between different types of tests, we need to define some basic terminology.

I tend to categorize Front-End tests into two general categories:

- Implementation-based tests: usually categorized as unit or integration tests, these tests interact with your code directly.

- UI-based tests: these tests primarily interact with the UI, sometimes with minimal interaction with implementation details, for example intercepting network requests for mocking purposes.

UI-based tests are clearly much less coupled than implementation-based ones, since they rely on UI elements, which change less frequently, rather than on the implementation.

But this doesn’t mean they are better! Here are some examples where implementation-based tests are more effective:

Testing Features with Many Variants

Because implementation-based tests have a narrower scope, they are more isolated and take less time to execute.

If you have a form with 50 different variations, covering it only with UI tests will take a long time to write, execute, and extend.

The isolation of implementation-based tests lets you quickly write tests for many different scenarios.
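To illustrate, here is a table-driven sketch for a hypothetical shipping form whose postal-code rule varies by country. The countries and regular expressions are simplified assumptions, not real validation rules. Each variant is one row in a table, so adding a case costs one line rather than one UI test.

```typescript
type Country = "US" | "UK" | "DE";

interface ShippingForm {
  country: Country;
  postalCode: string;
}

// Hypothetical, simplified postal-code patterns per country.
const postalPatterns: Record<Country, RegExp> = {
  US: /^\d{5}(-\d{4})?$/,
  UK: /^[A-Z]{1,2}\d[A-Z\d]? ?\d[A-Z]{2}$/i,
  DE: /^\d{5}$/,
};

function validatePostalCode(form: ShippingForm): boolean {
  return postalPatterns[form.country].test(form.postalCode.trim());
}

// Each row is one variant: [form input, expected validity].
const cases: Array<[ShippingForm, boolean]> = [
  [{ country: "US", postalCode: "94103" }, true],
  [{ country: "US", postalCode: "94103-1234" }, true],
  [{ country: "US", postalCode: "9410" }, false],
  [{ country: "UK", postalCode: "SW1A 1AA" }, true],
  [{ country: "UK", postalCode: "12345" }, false],
  [{ country: "DE", postalCode: "10115" }, true],
  [{ country: "DE", postalCode: "10115-1" }, false],
];

// Returns a description of every row whose result differs from the expectation.
function runCases(): string[] {
  return cases
    .filter(([form, expected]) => validatePostalCode(form) !== expected)
    .map(([form]) => `${form.country} ${form.postalCode}`);
}
```

An empty array from `runCases()` means every variant behaved as expected; in a real project the same table would typically feed a test runner’s parameterized-test feature.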

Implementation-based tests can be taken a step further with techniques like property-based testing, which make it possible to cover scenarios with highly dynamic inputs and outputs in a single test, potentially replacing dozens of UI tests.
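Libraries such as fast-check provide property-based testing for TypeScript, including input generators and automatic shrinking of failing cases. To keep the idea visible without dependencies, the sketch below hand-rolls it: a seeded random generator produces many tag lists, and one check asserts properties that must hold for every input instead of listing expected outputs case by case. `normalizeTags` is a hypothetical function under test.

```typescript
// Hypothetical function under test: trim, lowercase, drop empties, dedupe, sort.
function normalizeTags(tags: string[]): string[] {
  const cleaned = tags
    .map((tag) => tag.trim().toLowerCase())
    .filter((tag) => tag.length > 0);
  return Array.from(new Set(cleaned)).sort();
}

// Small seeded PRNG (mulberry32) so every run, and every failure, is reproducible.
function mulberry32(seed: number): () => number {
  let state = seed >>> 0;
  return () => {
    state = (state + 0x6d2b79f5) >>> 0;
    let t = state;
    t = Math.imul(t ^ (t >>> 15), t | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296;
  };
}

// Generator: 0 to 9 random tags built from a tiny alphabet with mixed case and spaces.
const ALPHABET = "aAbBcC ";
function randomTags(rand: () => number): string[] {
  const tags: string[] = [];
  const count = Math.floor(rand() * 10);
  for (let i = 0; i < count; i++) {
    let tag = "";
    const length = Math.floor(rand() * 6);
    for (let j = 0; j < length; j++) {
      tag += ALPHABET.charAt(Math.floor(rand() * ALPHABET.length));
    }
    tags.push(tag);
  }
  return tags;
}

// Property check: returns the first counterexample found, or null if all runs pass.
function checkNormalizeTags(runs: number, seed: number): string[] | null {
  const rand = mulberry32(seed);
  for (let run = 0; run < runs; run++) {
    const input = randomTags(rand);
    const output = normalizeTags(input);
    // Property 1: strictly ascending, which also implies no duplicates.
    const sortedAndUnique = output.every((tag, i) => i === 0 || output[i - 1] < tag);
    // Property 2: every non-blank input tag survives in normalized form.
    const nothingLost = input.every((tag) => {
      const normalized = tag.trim().toLowerCase();
      return normalized === "" || output.indexOf(normalized) !== -1;
    });
    // Property 3: every output tag is already trimmed, lowercase, and non-empty.
    const allNormalized = output.every(
      (tag) => tag.length > 0 && tag === tag.trim().toLowerCase()
    );
    if (!sortedAndUnique || !nothingLost || !allNormalized) return input;
  }
  return null;
}
```

Calling `checkNormalizeTags(500, 42)` runs 500 random inputs; a non-null return value is a reproducible counterexample, since the same seed always yields the same inputs.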

Testing Complex Implementations

Testing implementation isn’t a bad thing. If you have a complex algorithm, you may want an additional test that verifies its steps were executed properly.

In cases where a correct output doesn’t necessarily prove that the code under test works, testing the implementation can provide additional safety.
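As an illustration, consider a hypothetical retry helper with exponential backoff. Asserting only the final result would pass even if the delays between attempts were wrong, so this sketch injects the `wait` function and records each delay, letting a test assert the intermediate steps as well. `runWithRetry`, `RetryOptions`, and `makeFlakyOperation` are illustrative names, not an existing API.

```typescript
interface RetryOptions {
  maxAttempts: number;
  baseDelayMs: number;
}

// Hypothetical retry helper: calls `operation` until it succeeds, waiting an
// exponentially growing delay (base, 2x base, 4x base, ...) between attempts.
// `wait` is injected so a test can record the delays instead of sleeping.
function runWithRetry<T>(
  operation: () => T,
  options: RetryOptions,
  wait: (ms: number) => void
): T {
  let lastError: unknown;
  for (let attempt = 0; attempt < options.maxAttempts; attempt++) {
    try {
      return operation();
    } catch (error) {
      lastError = error;
      // No wait after the final attempt.
      if (attempt < options.maxAttempts - 1) {
        wait(options.baseDelayMs * 2 ** attempt);
      }
    }
  }
  throw lastError;
}

// An operation that throws `failures` times before succeeding.
function makeFlakyOperation(failures: number): () => string {
  let calls = 0;
  return () => {
    calls++;
    if (calls <= failures) throw new Error("temporary failure");
    return "ok";
  };
}

const recordedDelays: number[] = [];
const result = runWithRetry(
  makeFlakyOperation(2),
  { maxAttempts: 5, baseDelayMs: 100 },
  (ms) => {
    recordedDelays.push(ms);
  }
);
// result is "ok", which says little on its own; the steps show up in recordedDelays: [100, 200]
```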

4. Real World Examples

Making strategic testing choices is about understanding the value the test brings compared to the cost the test incurs.

Advice such as “write mostly unit tests” or “write mostly integration tests” can be harmful and lead you to the testing hell scenario.

Let’s look at a few scenarios and see how different real-life requirements affect the choice of which test to write:

Testing a feature that’s about to be refactored

If a feature is going to be refactored, writing mostly implementation-based tests is a recipe for disaster: the code will change frequently, so these tests will break often.

When refactoring, you know many changes will happen, which is why it’s better to cover the feature mostly with UI tests.

You can still write some unit tests where you want to test a complex implementation or scenarios with multiple variations, but do so as a conscious choice, knowing these tests will likely need to be rewritten later.

Testing a new feature

You wrote a new feature and want to cover it with tests. Some features consist mostly of happy paths and can be covered with a few good integration and E2E tests.

Other features may warrant more implementation-based tests to cover variations or to assert complex implementation logic.

Avoid the mistake of adding implementation-based tests to a feature when UI tests already cover the same scope, creating double coverage just for the sake of testing.

Testing an old feature

If a feature changes a lot, adding tests can introduce more safety for those changes. In this case, it’s useful to treat the scenario the same way as testing a new feature.

If the code in that area never changes, or is very stable, it’s more effective to start with places where tests will have a higher impact.

5. Summary

To answer the question of which test to write, consider the benefits and costs of each specific test rather than trying to apply a generic testing distribution model.

While it’s important to take factors like the time it takes to write and execute a test into account, the most important thing is whether your tests provide value, which depends heavily on the cost to change code that the tests create.

We looked at how to understand the cost to change code by examining the coupling tests create, and by analyzing test scope to determine how much a test covers.

We also discussed factors that come into play when picking which test to write, such as complex implementations or features with many variations.

Ultimately, choosing the right test is about making tradeoffs specific to your use case. Sometimes it’s worth creating many highly coupled tests to reduce risk, and sometimes it’s not worth writing many tests at all, because a few simple ones suffice.

The more you practice considering all relevant tradeoffs, the better you’ll become at identifying a useful testing strategy.